[GAMES 202] Lecture Notes 3

Shadow Mapping

A 2-pass algorithm:

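Light pass: render the scene from the light's point of view and record depth into a texture (render-to-texture, in a separate framebuffer).

Camera pass: reproject each camera-visible fragment into light space and compare its depth from the light against the depth stored in the shadow map.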

An image-space algorithm. Pro: once the shadow map exists, no knowledge of the scene geometry is needed. Cons: self-occlusion and aliasing (caused by limited depth precision and shadow-map resolution).

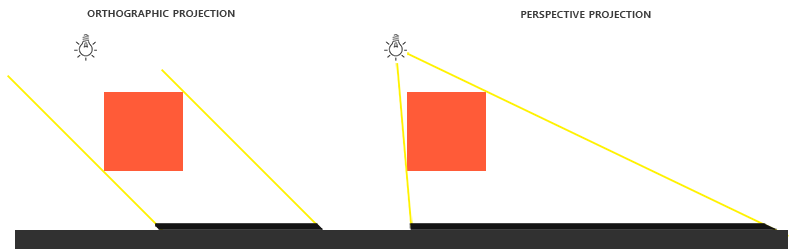

Implementation details: the depth being compared can be either the real (linear) depth in the light's view space, as with an orthographic projection, or the perspective-projected z value. Either works as long as both passes use the same one. Orthographic projection is often used for directional lights.
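As a minimal CPU-side sketch of the two passes (not a GPU implementation), the following assumes the scene is given as sample points already expressed in the light's space, with a directional light looking down the -z axis and an orthographic projection. The names `to_texel`, `light_pass`, `camera_pass`, `extent`, and the toy scene are all made up for illustration.

```python
import numpy as np

def to_texel(xy, extent, resolution):
    """Orthographic projection: map light-space x, y in [-extent, extent] to texel indices."""
    uv = np.floor((np.asarray(xy) + extent) / (2 * extent) * resolution).astype(int)
    return np.clip(uv, 0, resolution - 1)

def light_pass(points, extent, resolution):
    """Pass 1: keep the nearest depth seen by the light in every shadow-map texel."""
    depth_map = np.full((resolution, resolution), np.inf)
    uv = to_texel(points[:, :2], extent, resolution)
    depth = -points[:, 2]  # distance along the light's viewing direction
    for (u, v), d in zip(uv, depth):
        depth_map[v, u] = min(depth_map[v, u], d)
    return depth_map

def camera_pass(point, depth_map, extent, bias=1e-3):
    """Pass 2: reproject a visible point into light space and compare depths.

    Returns True when the point is lit. Both passes use the same linear depth.
    """
    u, v = to_texel(point[:2], extent, depth_map.shape[0])
    return bool(-point[2] <= depth_map[v, u] + bias)

# Toy scene (assumed): a floor at depth 5 and a small occluder at depth 2.
grid = np.linspace(-0.99, 0.99, 64)
floor = np.array([(x, y, -5.0) for x in grid for y in grid])
small = np.linspace(-0.2, 0.2, 16)
occluder = np.array([(x, y, -2.0) for x in small for y in small])

shadow_map = light_pass(np.vstack([floor, occluder]), extent=1.0, resolution=16)
under_occluder = camera_pass(np.array([0.0, 0.0, -5.0]), shadow_map, extent=1.0)
far_corner = camera_pass(np.array([0.8, 0.8, -5.0]), shadow_map, extent=1.0)
print(under_occluder, far_corner)  # shadowed point first, lit point second
```

The floor point directly under the occluder fails the depth test and is shadowed, while the point near the corner passes it.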

Issues

Self occlusion

z-fighting (shadow acne): a precision problem. The shadow map assumes a constant depth over the area covered by each texel, while in reality the depth varies across that area.

Solution #1: add a bias when comparing depth values (the bias may adapt to the light's incident angle). Cons: detached shadows (peter panning) appear when the bias is too big.

Solution #2: second-depth shadow mapping, which uses the midpoint between the first and second depth values as the shadow-map depth (the second depth might be obtainable with face culling?). Cons: it requires watertight objects and adds a large overhead. Other solutions: oversampling, …
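For Solution #1, one common way to make the bias adapt to the incident angle is to scale it by how far the light direction tilts away from the surface normal. The sketch below assumes that form. `adaptive_bias`, `is_lit`, and the constants `min_bias` and `max_bias` are hypothetical names and tuning values, not taken from the lecture.

```python
import numpy as np

def adaptive_bias(normal, light_dir, min_bias=0.005, max_bias=0.05):
    """Depth bias that grows as the light approaches a grazing angle.

    normal, light_dir: 3-vectors; light_dir points from the surface toward the light.
    min_bias, max_bias: assumed tuning constants in shadow-map depth units.
    """
    n = np.asarray(normal, dtype=float)
    l = np.asarray(light_dir, dtype=float)
    cos_theta = np.dot(n, l) / (np.linalg.norm(n) * np.linalg.norm(l))
    cos_theta = float(np.clip(cos_theta, 0.0, 1.0))
    return max(max_bias * (1.0 - cos_theta), min_bias)

def is_lit(fragment_depth, shadow_map_depth, bias):
    """Biased depth test: the fragment is lit unless it is clearly behind the stored depth."""
    return fragment_depth - bias <= shadow_map_depth

head_on = adaptive_bias([0, 0, 1], [0, 0, 1])   # light along the normal: smallest bias
grazing = adaptive_bias([0, 0, 1], [1, 0, 0])   # light at 90 degrees: largest bias
print(head_on, grazing)
print(is_lit(1.002, 1.0, bias=0.0), is_lit(1.002, 1.0, bias=head_on))
```

Raising `max_bias` removes more acne at grazing angles but also increases the risk of peter panning, so the constants have to be tuned per scene.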

Aliasing

Occurs when the shadow of a small object is projected onto a far-away surface… Solutions: Cascaded Shadow Mapping (CSM), PCF, …

Multiple light sources? More shadow maps and more computational cost! (Optimization methods exist.)

Approximations in RTR

An important approximation:
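Assuming the approximation meant here is the usual product-integral split used in real-time rendering, it pulls one factor out of the integral as its average over the domain $\Omega$ (the symbols $f$, $g$, and $\Omega$ are notation chosen for this sketch):

$$
\int_{\Omega} f(x)\,g(x)\,\mathrm{d}x \;\approx\; \frac{\int_{\Omega} f(x)\,\mathrm{d}x}{\int_{\Omega} \mathrm{d}x}\cdot\int_{\Omega} g(x)\,\mathrm{d}x
$$

The denominator normalizes the first integral so that it becomes an average of $f$. The split is exact when $g$ is constant over $\Omega$.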

It is more accurate when at least one of these conditions holds: (1) the integration domain is small (small support); (2) the integrand is smooth.
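A quick numerical check can make the two conditions concrete. The sketch below compares the exact integral of a product f·g with the split form (average of f over the domain) × (integral of g), using a midpoint Riemann sum. The step function standing in for visibility, the cosine standing in for shading, and all function names are illustrative assumptions.

```python
import numpy as np

def relative_error(f, g, a, b, n=100_000):
    """Relative error of replacing integral(f*g) by mean(f) * integral(g) on [a, b]."""
    dx = (b - a) / n
    x = a + (np.arange(n) + 0.5) * dx           # midpoint samples
    exact = np.sum(f(x) * g(x)) * dx
    mean_f = np.sum(f(x)) * dx / (b - a)        # average of f over the domain
    split = mean_f * np.sum(g(x)) * dx
    return abs(exact - split) / abs(exact)

def visibility(x):
    """Assumed binary factor: 0 below x = 0.3, 1 above it."""
    return (x > 0.3).astype(float)

def constant(x):
    """Assumed perfectly smooth factor."""
    return np.full_like(x, 2.0)

err_wide = relative_error(visibility, np.cos, 0.0, 1.5)      # large support
err_narrow = relative_error(visibility, np.cos, 0.29, 0.31)  # small support
err_const = relative_error(visibility, constant, 0.0, 1.5)   # smooth (constant) g
print(err_wide, err_narrow, err_const)
```

With these inputs the error is above 10% on the wide domain, well under 1% on the narrow one, and vanishes up to rounding when g is constant.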

Applications: decoupling the visibility term, so that it is handled as a separate step (shade first, then apply shadow mapping).

Constraints:

1 Small support: point / directional lights

2 Smooth integrand: diffuse BSDF / constant-radiance area lighting
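Applied to the rendering equation, and assuming the usual notation ($L_i$ for incoming radiance, $f_r$ for the BSDF, $V$ for the visibility term, $\Omega^+$ for the hemisphere, none of which are spelled out in these notes), the decoupling would read:

$$
L_o(p,\omega_o)=\int_{\Omega^+} L_i(p,\omega_i)\,f_r(p,\omega_i,\omega_o)\,\cos\theta_i\,V(p,\omega_i)\,\mathrm{d}\omega_i
\;\approx\;
\frac{\int_{\Omega^+} V(p,\omega_i)\,\mathrm{d}\omega_i}{\int_{\Omega^+}\mathrm{d}\omega_i}\cdot\int_{\Omega^+} L_i(p,\omega_i)\,f_r(p,\omega_i,\omega_o)\,\cos\theta_i\,\mathrm{d}\omega_i
$$

The first factor is the averaged visibility that shadow mapping estimates. The second is ordinary unshadowed shading. Constraint 1 keeps the domain small, and constraint 2 keeps the product $L_i\,f_r\cos\theta_i$ smooth.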

PCSS: Percentage-Closer Soft Shadows

PCF: Percentage Closer Filtering

(Image source: NVIDIA)

Anti-aliasing the shadow’s edges: filter the results of the shadow-map depth comparisons, i.e. around each point, average the visibility results. Filter size: small -> sharper, larger -> softer.
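The filtering step can be sketched on the CPU as follows. This is a minimal sketch assuming a square box filter with equal weights over a NumPy depth map. `pcf_visibility`, `radius`, `bias`, and the toy depth map are illustrative names and data.

```python
import numpy as np

def pcf_visibility(depth_map, u, v, receiver_depth, radius, bias=1e-3):
    """Average the binary depth-test results in a (2*radius+1)^2 window around texel (u, v)."""
    h, w = depth_map.shape
    v0, v1 = max(v - radius, 0), min(v + radius, h - 1)
    u0, u1 = max(u - radius, 0), min(u + radius, w - 1)
    window = depth_map[v0:v1 + 1, u0:u1 + 1]
    # Compare first, then average: the filter acts on comparison results,
    # not on the stored depths themselves.
    lit = receiver_depth <= window + bias
    return float(lit.mean())

# Toy shadow map (assumed): a blocker at depth 1 covers columns 0..3,
# the receiver plane at depth 5 is visible to the light everywhere else.
toy_map = np.full((8, 8), 5.0)
toy_map[:, :4] = 1.0

hard = pcf_visibility(toy_map, u=4, v=4, receiver_depth=5.0, radius=0)
soft = pcf_visibility(toy_map, u=4, v=4, receiver_depth=5.0, radius=1)
print(hard, soft)
```

With `radius=0` the result is the plain binary shadow test. Larger radii return fractional visibility near the shadow edge, which is what softens it.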

Issue: sometimes the softening goes too far, especially where the shadow is close to the blocker, which looks unrealistic. Notice the noisy shadow near 202chan’s hair:

From PCF to PCSS: PCF with adaptive filter size

Guess: will a very large filter achieve soft shadows?

Key observation: the farther the receiver is from the blocker, the softer the shadow.

Conclusion: filter size -> relative average projected blocker depth

Steps:

1 Blocker Search: compute the average blocker depth from the shadow map (notes: [1] the shadow map is generated by treating the area light as a point light; [2] the search area can depend on the area light's size and the receiver’s distance from the light.)

2 Penumbra Estimation: use the average blocker depth to determine the filter size

3 Do PCF using the adaptively sized filter
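The three steps can be sketched as below. The notes do not give the penumbra formula, so this sketch assumes the common similar-triangles estimate, penumbra = (d_receiver - d_blocker) * light_size / d_blocker. `pcss_visibility`, `light_size_texels`, `search_radius`, and the toy depth maps are hypothetical, and a fixed search radius is used instead of one derived from the light size.

```python
import numpy as np

def window(depth_map, u, v, radius):
    """Square neighbourhood of texel (u, v), clipped to the map borders."""
    h, w = depth_map.shape
    return depth_map[max(v - radius, 0):min(v + radius, h - 1) + 1,
                     max(u - radius, 0):min(u + radius, w - 1) + 1]

def pcss_visibility(depth_map, u, v, receiver_depth, light_size_texels,
                    search_radius=3, bias=1e-3):
    # Step 1: blocker search. Average only the texels that actually occlude.
    region = window(depth_map, u, v, search_radius)
    blockers = region[region + bias < receiver_depth]
    if blockers.size == 0:
        return 1.0  # no blocker nearby: fully lit
    avg_blocker = blockers.mean()

    # Step 2: penumbra estimation (assumed similar-triangles formula).
    penumbra = (receiver_depth - avg_blocker) * light_size_texels / avg_blocker
    radius = int(round(penumbra / 2))

    # Step 3: PCF with the adaptively sized filter.
    lit = receiver_depth <= window(depth_map, u, v, radius) + bias
    return float(lit.mean())

def half_covered_map(blocker_depth, size=32, receiver_depth=5.0):
    """Toy map (assumed): a blocker covers the left half, the receiver the rest."""
    depth_map = np.full((size, size), receiver_depth)
    depth_map[:, :size // 2] = blocker_depth
    return depth_map

# Same receiver texel just outside the blocker's edge, two blocker distances.
near = pcss_visibility(half_covered_map(4.5), 16, 16, 5.0, light_size_texels=4)
far = pcss_visibility(half_covered_map(1.0), 16, 16, 5.0, light_size_texels=4)
print(near, far)
```

A blocker close to the receiver yields a tiny filter and a hard edge, while a blocker far from the receiver yields a wide filter and a fractional visibility, matching the key observation above.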

[Figure: PCF shadows vs. PCSS shadows]

Banner character: ねり by しらたま from 星空鉄道とシロの旅